Daniil Dmitriev

I am a postdoc at the University of Pennsylvania, hosted by Yuting Wei in the Department of Statistics and Data Science at the Wharton School. My research interests lie at the intersection of mathematics, machine learning, and theoretical computer science, with a current focus on understanding the interplay between structure and randomness in algorithm analysis. I completed my PhD at ETH Zurich, advised by Afonso Bandeira and Fanny Yang, and supported by the ETH AI Center and the ETH FDS initiative. In Fall 2024, I visited the Simons Institute for the Theory of Computing at UC Berkeley for the program Modern paradigms in generalization. I have an MS from EPFL, where I worked with Lenka Zdeborová and Martin Jaggi, and a BS from MIPT, where I worked with Maksim Zhukovskii. Contact me at: dmitrievdaniil97@gmail.com.

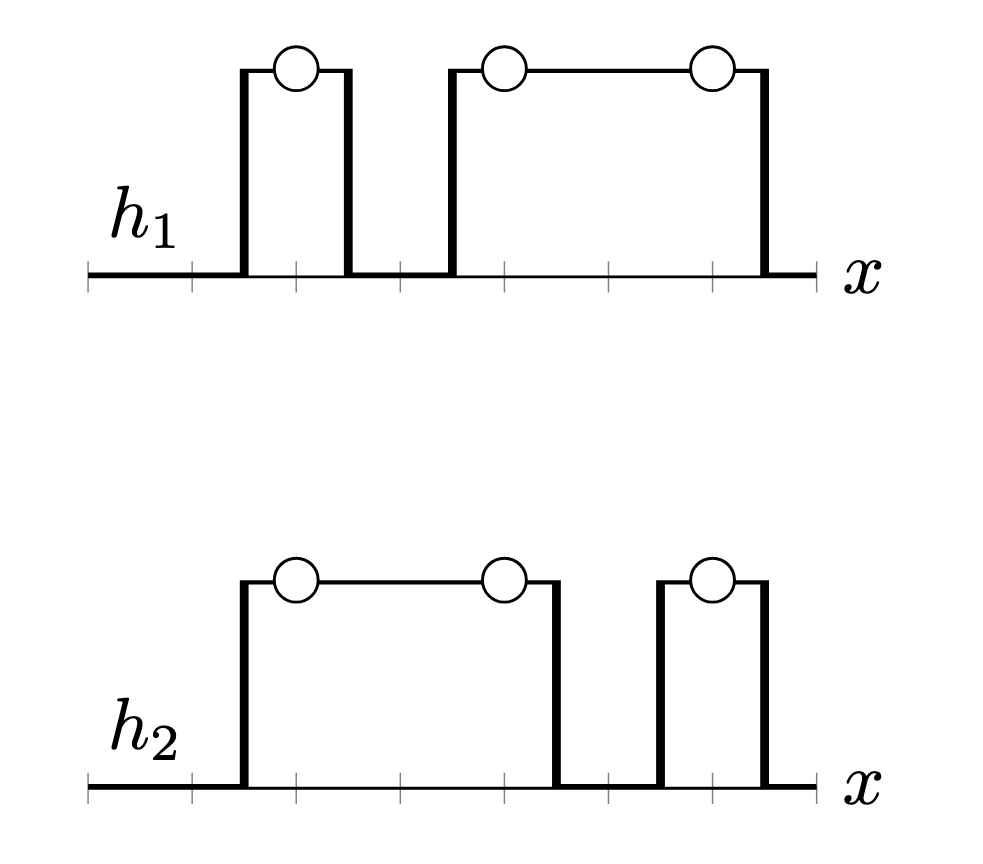

Learning in an echo chamber: online learning with replay adversary
DD, Harald Eskelund Franck, Carolin Heinzler, Amartya Sanyal (αβ order)
preprint, 2025. arxiv
We propose a new online learning variant in which the learner might receive samples labelled via its own past models. Our results include upper and lower bounds against both adaptive and stochastic adversaries.

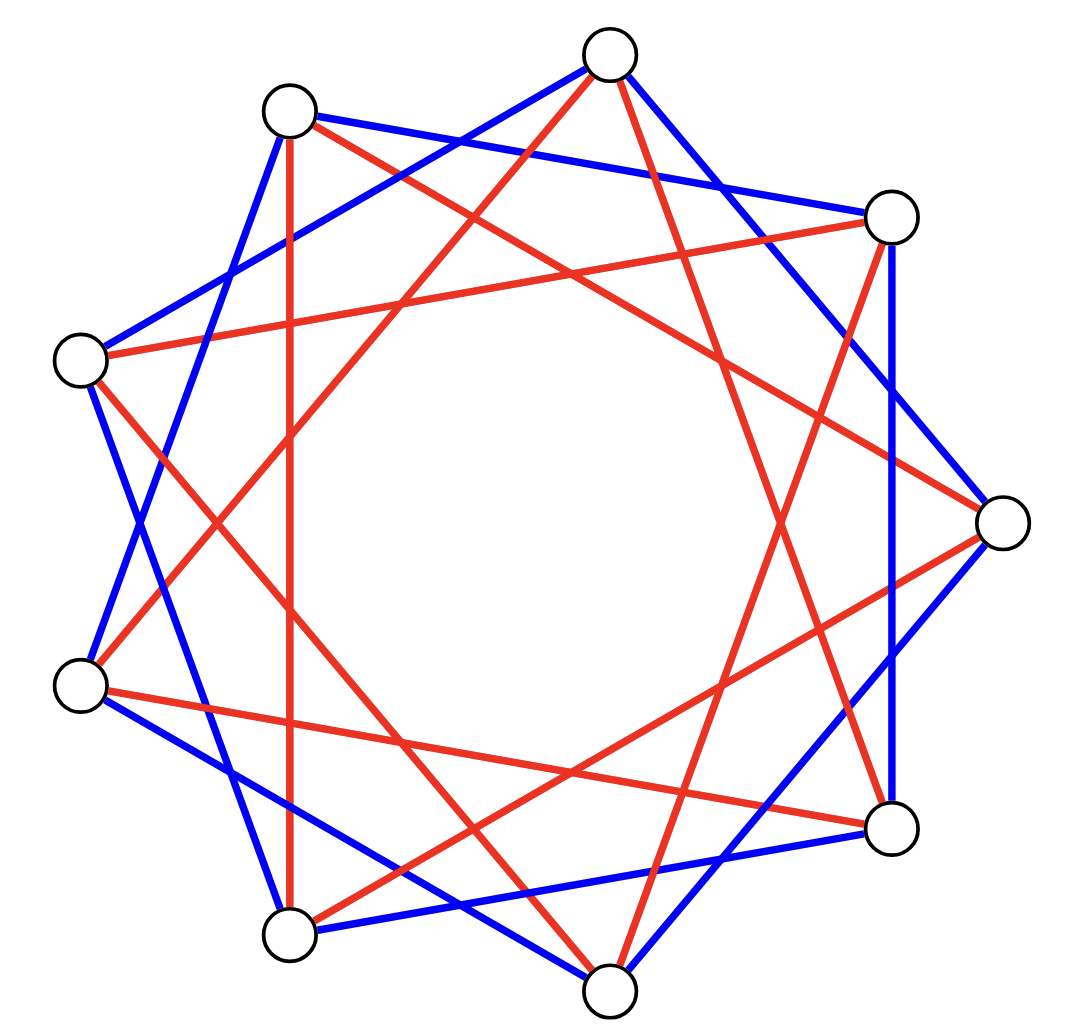

The Lovász number of random circulant graphs
Afonso S. Bandeira, Jarosław Błasiok, DD, Ulysse Faure, Anastasia Kireeva, Dmitriy Kunisky (αβ order)
SampTA, 2025. arxiv
We provide lower and upper bounds for the expected value of the Lovász theta number of random circulant graphs. Circulant graphs are a class of structured graphs where connectivity depends only on the difference between the vertex labels.

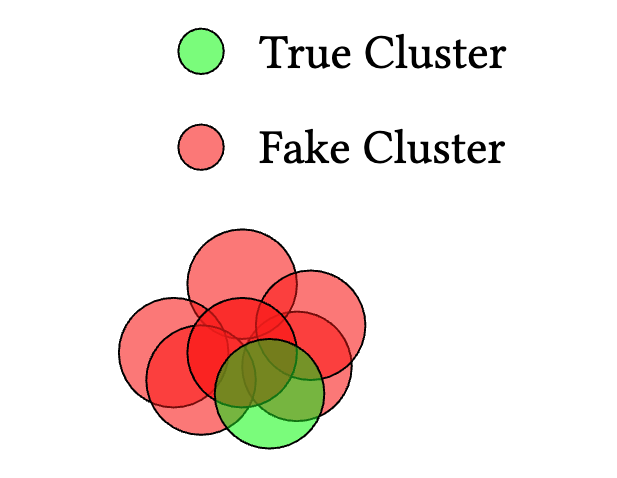

Robust mixture learning when outliers overwhelm small groups
DD*, Rares-Darius Buhai*, Stefan Tiegel, Alexander Wolters, Gleb Novikov, Amartya Sanyal, David Steurer, Fanny Yang
NeurIPS, 2024. arxiv / poster
We propose an efficient meta-algorithm for recovering the means of a mixture model in the presence of large additive contamination.

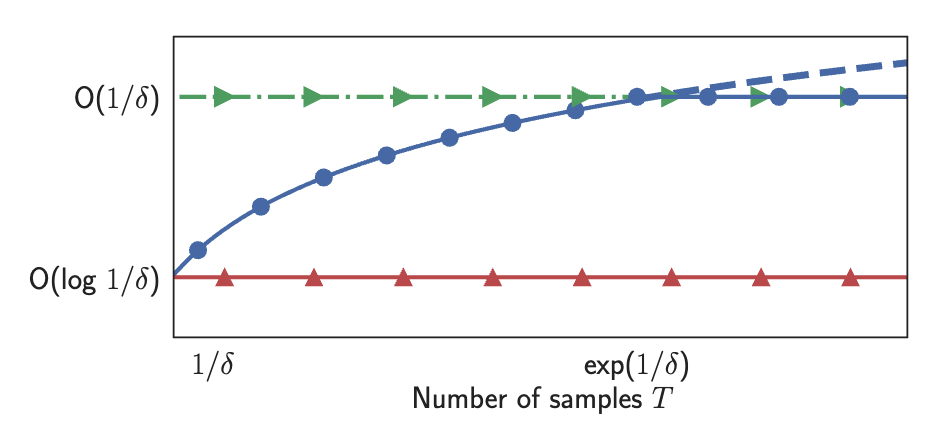

On the growth of mistakes in differentially private online learning: a lower bound perspective
DD, Kristof Szabo, Amartya Sanyal
COLT, 2024. arxiv / poster
We prove that, for a certain class of algorithms, the number of mistakes in online learning under a differential privacy constraint must grow logarithmically.

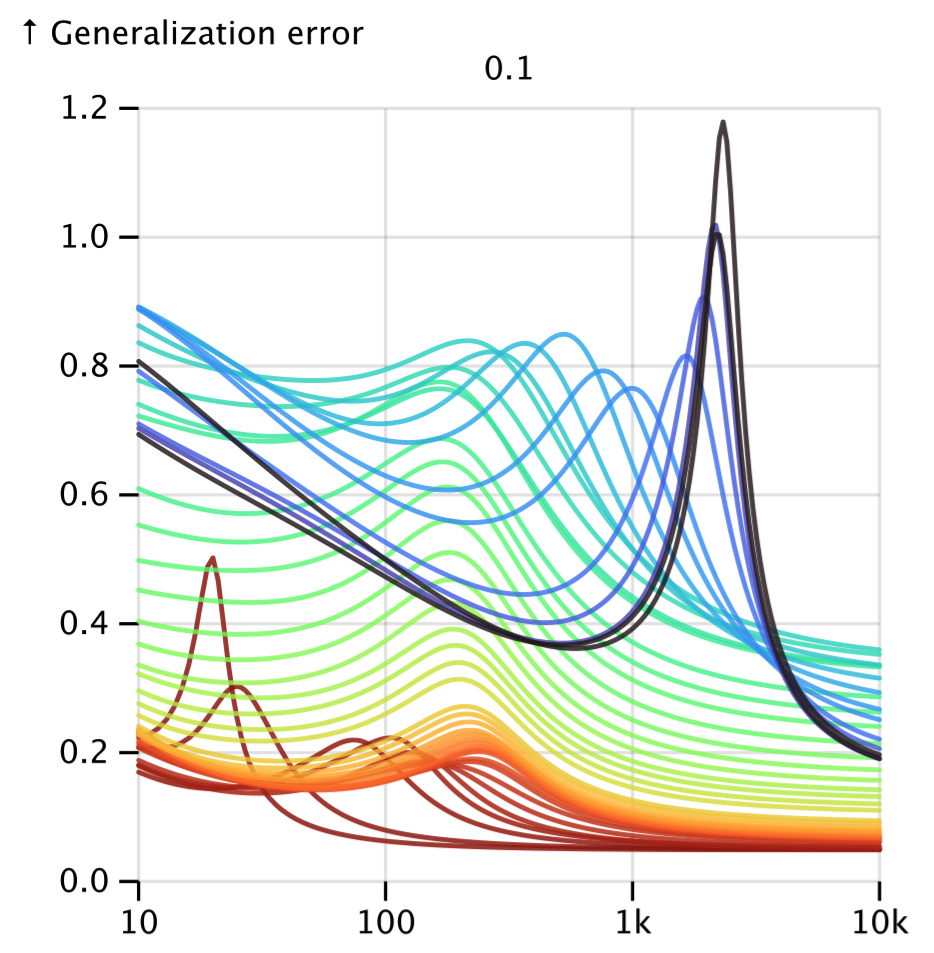

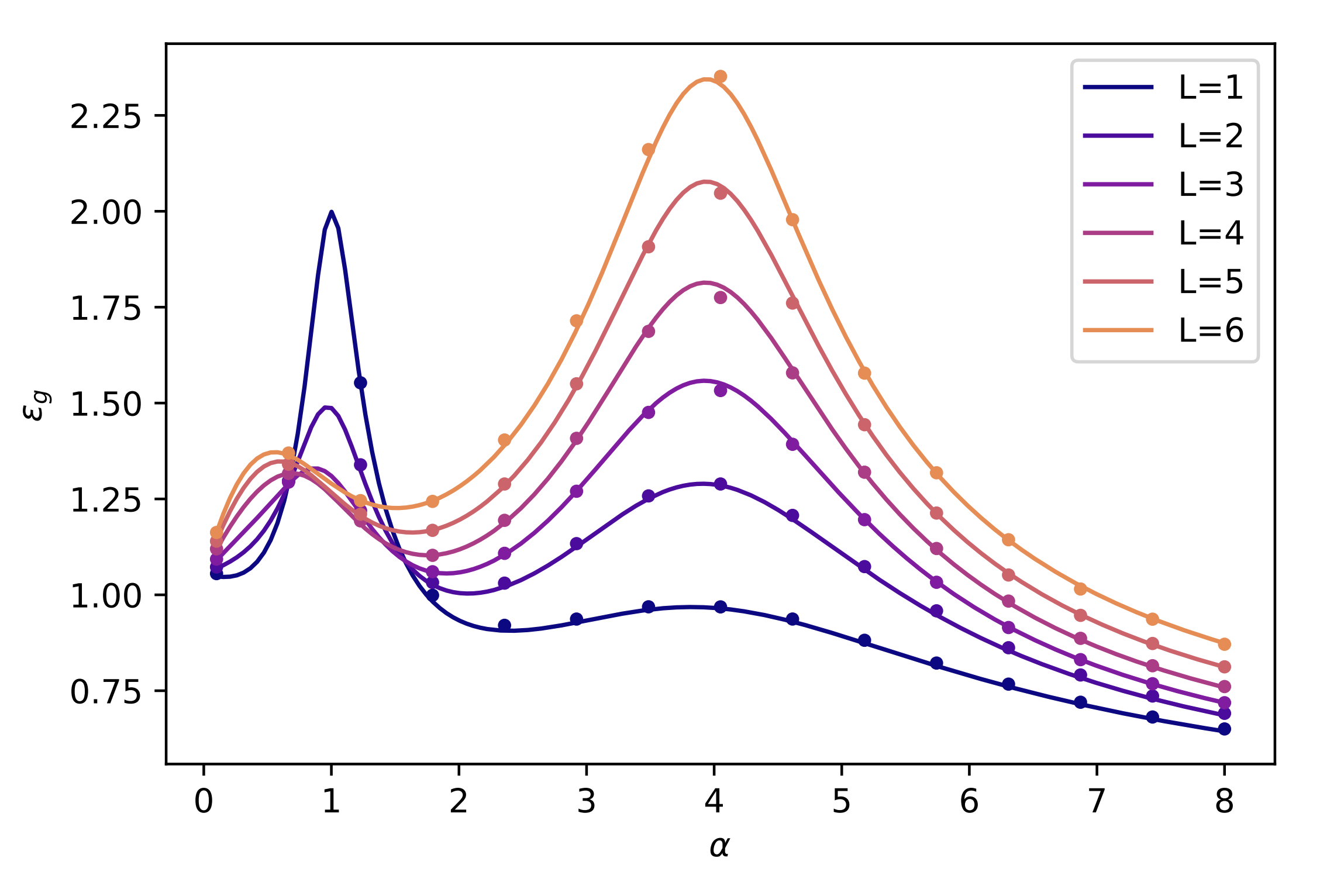

Asymptotics of learning with deep structured (random) features
Dominik Schröder*, DD*, Hugo Cui*, Bruno Loureiro
ICML, 2024. arxiv / poster
We derive a deterministic equivalent for the generalization error of general Lipschitz functions. Furthermore, we prove a linearization for a sample covariance matrix of a structured random feature model with two hidden layers.

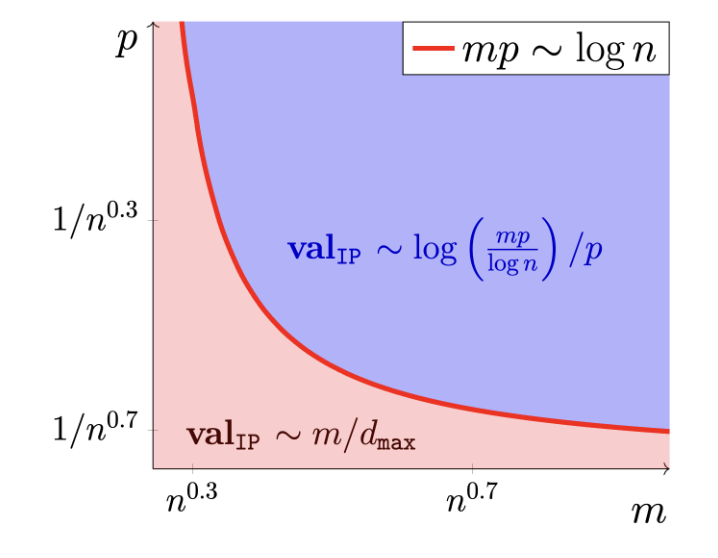

Greedy heuristics and linear relaxations for the random hitting set problem
Gabriel Arpino, DD, Nicolo Grometto (αβ order)
APPROX, 2024. arxiv
We prove that the standard greedy algorithm is order-optimal for the hitting set problem in the random Bernoulli case.

Deterministic equivalent and error universality of deep random features learning
Dominik Schröder, Hugo Cui, DD, Bruno Loureiro
ICML, 2023. arxiv / video / poster
We rigorously establish Gaussian universality for the test error in ridge regression in deep networks with frozen intermediate layers.

On monotonicity of Ramanujan function for binomial random variables
DD, Maksim Zhukovskii (αβ order)
Statistics & Probability Letters, 2021. arxiv
We analyze properties of the CDF of a binomial random variable near its median.

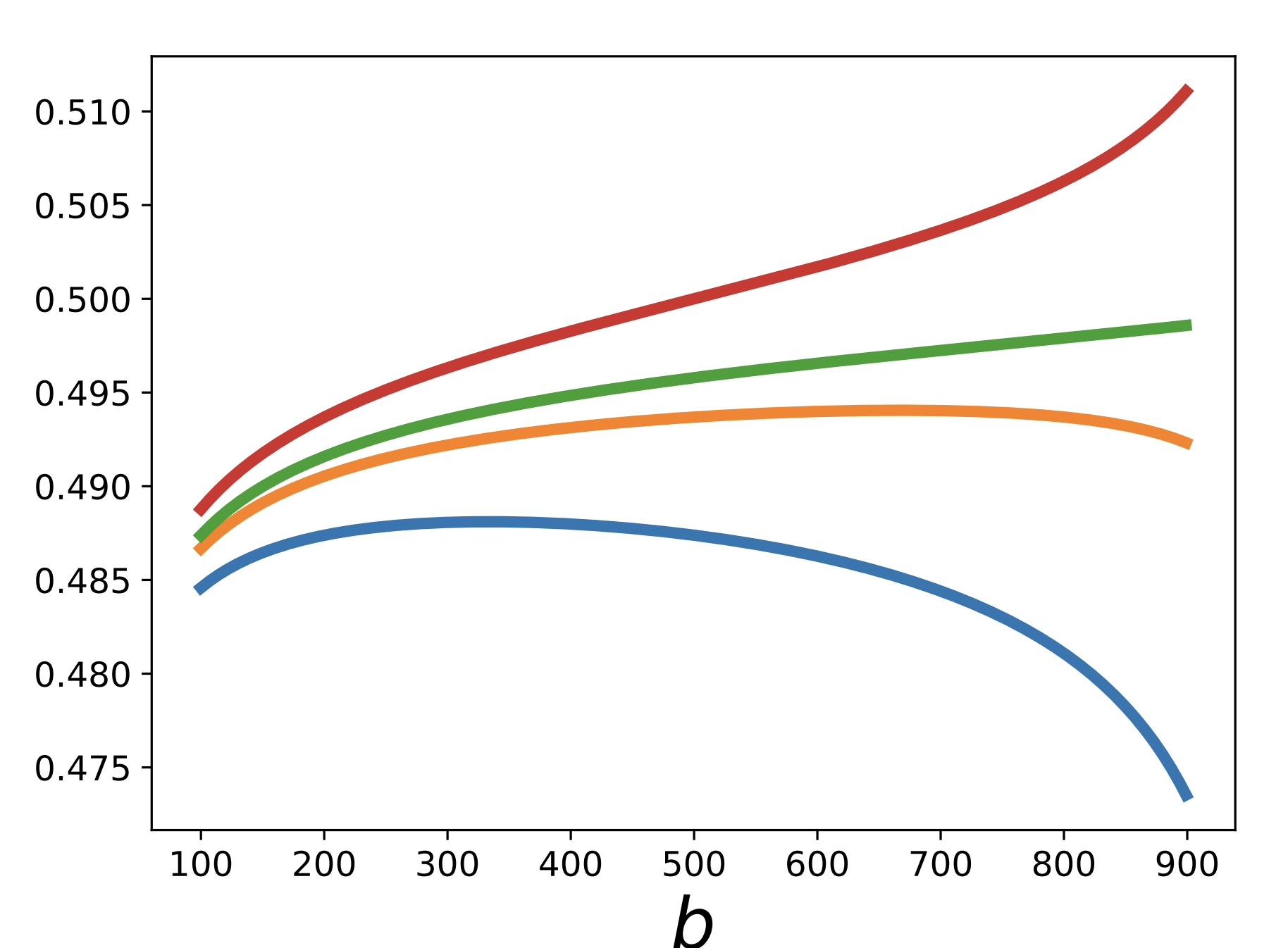

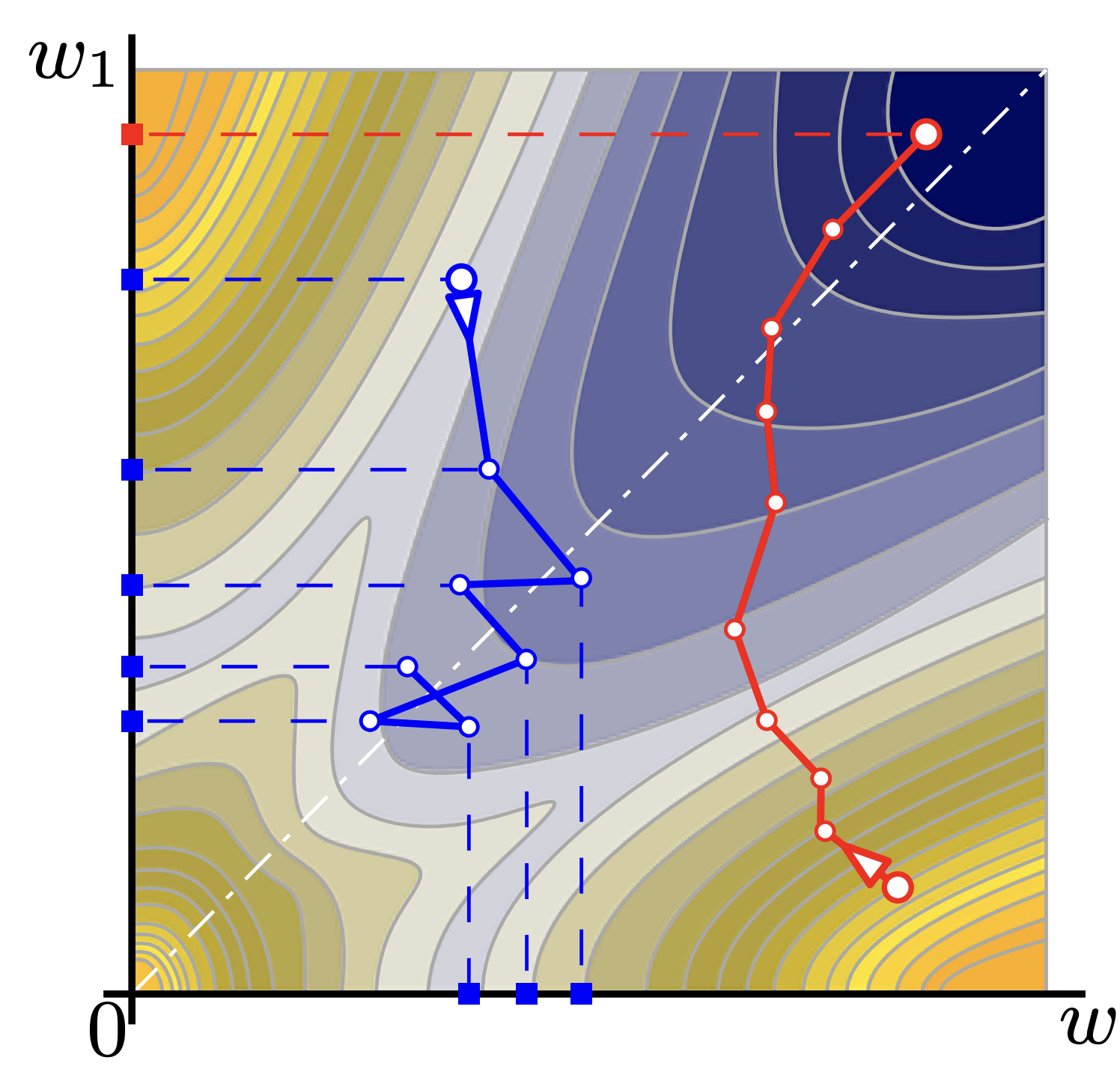

Dynamic model pruning with feedback
Tao Lin, Sebastian U Stich, Luis Barba, DD, Martin Jaggi
ICLR, 2020. arxiv
We propose a novel model compression method that generates a sparse trained model without additional overhead.

Invited Talks

DACO seminar, ETH Zurich, 2024 (website)

Youth in High Dimensions, ICTP, Trieste, 2024 (video)

Delta seminar, University of Copenhagen, 2024 (website)

Graduate seminar in probability, ETH Zurich, 2023

Workshop on Spin Glasses, Les Diablerets, 2022 (video)

Teaching and Service

ETH Zurich, TA: Mathematics of Data Science (Fall 2021), Mathematics of Machine Learning (Spring 2022)

EPFL, TA: Artificial Neural Networks (Spring 2020, Spring 2021)

Supervising MSc theses at ETH Zurich: Carolin Heinzler (Fall 2023), Krish Agrawal, Ulysse Faure (Spring 2024)

Berkeley Math Circle (Fall 2024)

Reviewer: NeurIPS 2024 (Top reviewer), ICLR 2025